OpenAI has undoubtedly captured the world's attention with its impressive capabilities and diverse range of applications. However, we were curious about what differentiates OpenAI from other AI providers. To get to the bottom of it, we interviewed three prominent specialized providers and asked them to shed light on their offerings: RevAI (Speech-to-Text), NeuralSpace (Text analysis), and Base64.ai (Document parsing).

Each of these providers excels in a specific domain, offering features and benefits that set it apart from OpenAI. Let's explore how they position themselves in the competitive landscape of artificial intelligence.

1. Rev (Speech-to-Text)

We had the opportunity to interview Rev, a leading provider in the field of Speech-to-Text (STT) technology. According to their team, Rev sets itself apart from OpenAI with the following features:

Processing Speed & Accuracy

Rev prides itself on fast processing times, allowing users to transcribe large volumes of audio content swiftly. This advantage is particularly valuable for organizations dealing with high-demand transcription tasks or time-sensitive projects. Rev also claims an edge on quality: according to their team, Rev ASR handles accents and difficult audio better than competing engines.

Async & real-time APIs

Rev's basic asynchronous speech API remains the primary choice for customers, but Rev also offers a real-time API for live streaming, which supports precise and timely captioning. Additionally, customers can opt for human transcription services provided by Rev.

Advanced STT Features

Rev goes beyond basic speech-to-text functionality by offering advanced features such as speaker diarization, which enables users to identify and differentiate between multiple speakers in the audio.

They also provide a profanity filter as well as customizable punctuation options for more precise transcription formatting.

2. NeuralSpace (NLP)

Our conversation with NeuralSpace shed light on their approach to Natural Language Processing (NLP) and language-specific solutions. Here's what they had to say about positioning themselves against OpenAI:

Trained on Specific Data

NeuralSpace prides itself on training its models on targeted datasets, including non-public information. Unlike OpenAI, which primarily relies on publicly accessible data from the World Wide Web, NeuralSpace leverages proprietary and specialized datasets.

This approach enables them to provide better performance and accuracy when processing text from specific regions, such as Asia, the Middle East, and Africa. By catering to linguistic nuances and cultural context, NeuralSpace delivers superior results in these regions.

Here is what Felix Laumann, CEO of NeuralSpace, has to say on the matter:

“OpenAI has done an outstanding job on utilising publicly accessible information (from the WWW) in the form of text and images to train a model that disseminates that information through a human-like conversation. […] What OpenAI cannot train their LLM on, or at least they haven't done it yet, is information that is not publicly available on the WWW.”

Many industry experts call OpenAI a company working on the "foundations" of LLMs, whereas NeuralSpace works on the level of "applications" of LLMs to certain business use cases.

Focusing on Specific Languages

NeuralSpace concentrates its efforts on developing NLP and voice solutions in languages prevalent in Asia, the Middle East, and Africa. While OpenAI's GPT-4 supports multiple languages, studies have shown that its capabilities are stronger in English.

By focusing on specific languages, NeuralSpace aims to provide more robust and accurate language processing capabilities tailored to the regions they serve.

3. Base64.ai (Doc Parser)

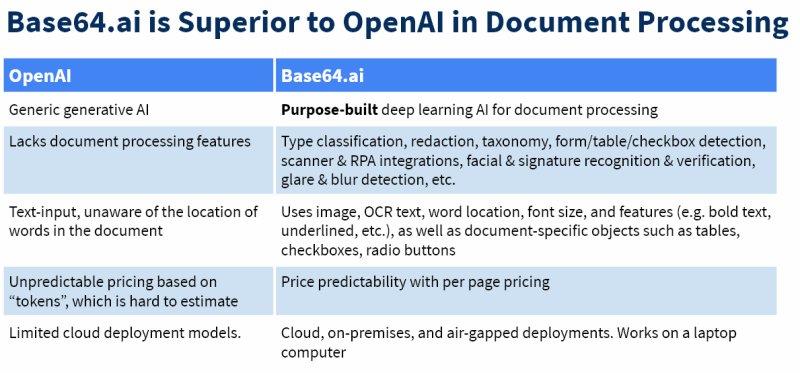

During our exploration of document parsing solutions, we engaged with Base64.ai. They highlighted the following unique aspects of their platform compared to OpenAI:

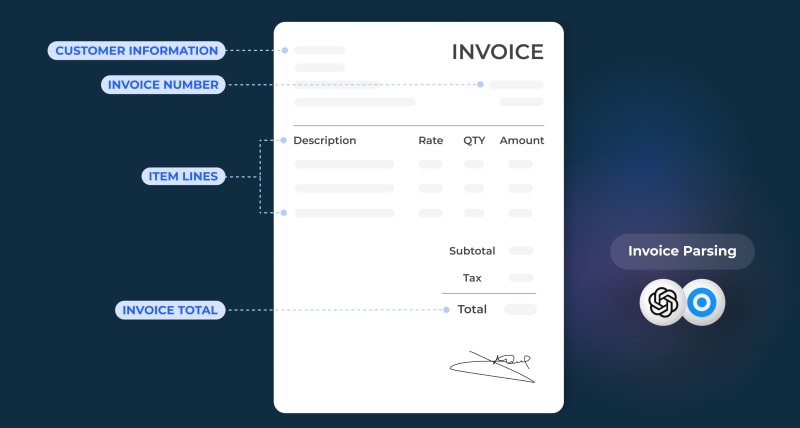

Processing Documents, not just Text

Unlike OpenAI, which primarily focuses on text processing, Base64.ai specializes in document parsing. Their expertise lies in extracting structured information from various document formats, including PDFs, images, and scanned files.

Plus, they offer a variety of features that aren't available in OpenAI. These include OCR; form, table, and checkbox detection; document type classification; image quality verification; integration with 400+ third parties; human-in-the-loop validation; custom taxonomies; and cloud and on-premises deployment options.

Document processing is particularly valuable for organizations dealing with substantial amounts of document-based data.

Simple Pricing Structure

Base64.ai adopts a per-page pricing model, which may be simpler and more cost-predictable than OpenAI's token-based pricing, where cost scales with the amount of text processed rather than the number of pages. This structure lets customers pay only for the pages they want processed, potentially making it more cost-effective for specific use cases.
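To make the comparison concrete, here is a minimal sketch of how one might estimate both kinds of cost for a batch of documents. The function names are hypothetical, and every rate and the tokens-per-page figure are placeholder inputs chosen for illustration, not actual Base64.ai or OpenAI prices.

```python
def per_page_cost(pages: int, price_per_page: float) -> float:
    """Cost under a per-page model: depends only on the page count."""
    return pages * price_per_page


def per_token_cost(pages: int, tokens_per_page: float,
                   price_per_1k_tokens: float) -> float:
    """Cost under a token-based model: depends on how much text each page holds."""
    total_tokens = pages * tokens_per_page
    return total_tokens / 1000 * price_per_1k_tokens


# Placeholder numbers for illustration only, not real vendor prices.
pages = 200
print(per_page_cost(pages, price_per_page=0.5))
print(per_token_cost(pages, tokens_per_page=600, price_per_1k_tokens=0.25))
```

The per-page estimate needs only the page count, while the token-based estimate also needs a guess at how dense the text is, which is where the difference in predictability comes from.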

Conclusion: what differentiates OpenAI from other AI models?

Long story short, while OpenAI is widely recognized for its impressive capabilities and versatility, its broad scope can sometimes result in certain limitations. This is where specialists come into play, offering their expertise to address specific needs and fill in the gaps.

OpenAI's general-purpose approach may not always excel in specialized domains or niche applications, where specialists can bring domain-specific knowledge and fine-tuned solutions.

By combining the strengths of OpenAI's powerful models with the specialized skills of domain experts, a more comprehensive and tailored solution can be achieved, ensuring optimal results across a wide range of contexts.

Access specialized AI models on Eden AI

If you're looking to explore specialized AI models, Eden AI provides a platform where you can give them a try.

With Eden AI, you have the opportunity to access and experiment with a diverse range of specialized AI models tailored for specific domains and use cases.

Whether you require industry-specific solutions, advanced natural language processing, computer vision applications, or other specialized AI capabilities, Eden AI offers a curated selection of models to cater to your unique needs.

There are many reasons for using multiple AI APIs:

Fallback provider: the basics.

Set up a second AI API that is called only when the main AI API does not perform well or is down. You can use the confidence score the provider returns, or other methods, to check its accuracy.
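A minimal sketch of this fallback logic might look as follows. It assumes each provider can be wrapped as a callable that returns an output together with a confidence score between 0 and 1; the `Result` type, the function names, the stub providers, and the 0.8 threshold are all hypothetical and do not correspond to any specific vendor's API.

```python
from typing import Callable, NamedTuple


class Result(NamedTuple):
    output: str
    confidence: float  # assumed to be in [0.0, 1.0]


# A provider is modelled as any callable that takes raw input and returns a Result.
Provider = Callable[[bytes], Result]


def call_with_fallback(data: bytes, primary: Provider, fallback: Provider,
                       min_confidence: float = 0.8) -> Result:
    """Use the primary provider; switch to the fallback if it fails or is unsure."""
    try:
        result = primary(data)
    except Exception:
        # The main API is down or returned an error.
        return fallback(data)
    if result.confidence < min_confidence:
        # The main API answered, but not confidently enough.
        return fallback(data)
    return result


# Stub providers standing in for real API clients.
def shaky_primary(data: bytes) -> Result:
    return Result(output="helo wrld", confidence=0.41)


def steady_fallback(data: bytes) -> Result:
    return Result(output="hello world", confidence=0.93)


print(call_with_fallback(b"audio-bytes", shaky_primary, steady_fallback).output)
```

The fallback is only ever called when the primary raises or reports low confidence, so in the common case you pay for a single request.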

Performance optimization.

After the testing phase, you will be able to build a mapping of AI vendors' performance based on the criteria you chose. Each piece of data you need to process can then be sent to the API that performs best for it.
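One way to sketch such a mapping is a routing table built from your own benchmark results. The categories, provider names, and scores below are hypothetical placeholders; in practice they would come from your testing phase and whatever criterion you chose to measure.

```python
from typing import Dict


def build_routing_table(scores: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """For each data category, keep the provider with the highest benchmark score."""
    return {
        category: max(by_provider, key=by_provider.get)
        for category, by_provider in scores.items()
    }


# Hypothetical benchmark results: category -> provider -> score on your criterion.
benchmark = {
    "invoices": {"provider_a": 0.91, "provider_b": 0.87},
    "receipts": {"provider_a": 0.78, "provider_b": 0.85},
}

routing = build_routing_table(benchmark)


def pick_provider(category: str, default: str = "provider_a") -> str:
    """Look up the best provider for a category, with a default for unseen ones."""
    return routing.get(category, default)


print(pick_provider("receipts"))
```

Rebuilding the table whenever you rerun the benchmark keeps the routing in step with provider updates.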

Cost-performance ratio optimization.

This method allows you to choose the cheapest provider that performs well for your data. Imagine that you choose Google Cloud API for customer "A" because all providers perform well on that customer's data and Google is the cheapest. For customer "B", you then choose Microsoft Azure, a more expensive API, because Google's performance is not satisfactory on that customer's data. (This is an arbitrary example.)
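The selection rule in this example can be sketched as "filter by a minimum accuracy, then take the lowest price". The function name, the prices, the accuracy figures, and the 0.85 threshold are illustrative assumptions only; they are not real prices or benchmark results for Google Cloud or Microsoft Azure.

```python
from typing import Dict, Optional


def cheapest_adequate(prices: Dict[str, float], accuracy: Dict[str, float],
                      min_accuracy: float) -> Optional[str]:
    """Return the cheapest provider whose measured accuracy meets the threshold."""
    adequate = [name for name in prices if accuracy.get(name, 0.0) >= min_accuracy]
    if not adequate:
        return None  # no provider is good enough for this data
    return min(adequate, key=lambda name: prices[name])


# Illustrative numbers only; they are not real prices or benchmark results.
prices = {"google": 1.0, "azure": 1.5}
accuracy_customer_a = {"google": 0.92, "azure": 0.94}
accuracy_customer_b = {"google": 0.71, "azure": 0.90}

print(cheapest_adequate(prices, accuracy_customer_a, min_accuracy=0.85))  # google
print(cheapest_adequate(prices, accuracy_customer_b, min_accuracy=0.85))  # azure
```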

Combine multiple AI APIs.

This approach is required if you are looking for extremely high accuracy. The combination leads to higher costs but makes your AI service safer and more accurate, because the AI APIs validate or invalidate each other's output for each piece of data.
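A simple way to let providers validate each other is a majority vote over their answers. The sketch below assumes the outputs are directly comparable labels (for example, a document type); free-form outputs such as transcripts would need a similarity measure instead. The function name and sample labels are hypothetical.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_vote(answers: Sequence[str]) -> Optional[str]:
    """Return the answer given by a strict majority of providers, else None."""
    if not answers:
        return None
    answer, votes = Counter(answers).most_common(1)[0]
    return answer if votes > len(answers) / 2 else None


print(majority_vote(["invoice", "invoice", "receipt"]))  # invoice
print(majority_vote(["invoice", "receipt", "contract"]))  # None
```

Returning `None` when there is no majority gives you a natural hook for sending that piece of data to human review rather than accepting an uncertain answer.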